Revision as of 15:05, July 6, 2017

Multiple Datacenter Deployment

Starting in release 8.5.1, GWS supports a deployment with multiple (two or more) datacenters. This section describes this type of deployment.

Overview

A multiple datacenter deployment implies a logical partitioning of all GWS nodes into segregated groups that use dedicated service resources, such as T-Servers, Stat Servers, and so on.

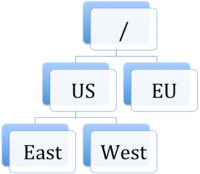

The topology of a GWS Cluster can be considered as a standard directory tree where a leaf node is a GWS data center. The following diagram shows a GWS Cluster with 2 geographical regions (US and EU), and 3 GWS datacenters (East and West in the US region, and EU as its own datacenter).

For data handling and distribution between GWS datacenters, the following third-party applications are used:

- Cassandra: A NoSQL database cluster that spans multiple datacenters and replicates data between them.

- Elasticsearch: A search engine that provides a fast and efficient solution for pattern searching across Cassandra data. Genesys recommends that each GWS datacenter have an independent, standalone Elasticsearch cluster.

Architecture

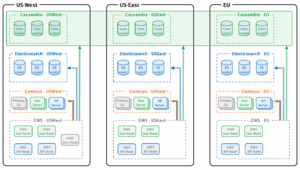

A typical GWS datacenter in a multiple datacenter deployment consists of the following components:

- 2 APINodes

- 2 StatNodes

- 3 Cassandra nodes

- 3 ElasticSearc nodes

- 1 SyncNode (only for Primary region)

The following diagram illustrates the architecture of this sample multiple datacenter deployment.

Note the following restrictions of this architecture:

- Only 1 SyncNode is deployed within a GWS Cluster.

- Each datacenter must have a dedicated list of Genesys servers, such as Configuration Servers, Stat Servers, and T-Servers.

- The Cassandra Keyspace definition must comply with the number of GWS datacenters.

- Each GWS datacenter must have its own standalone and dedicated Elasticsearch Cluster.

- The GWS node identity must be unique across the entire Cluster.
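Two of these restrictions (a single SyncNode and unique node identities) can be checked mechanically before rollout. The following Python sketch is illustrative only: the inventory format, the field names `node_id` and `role`, and the function `check_cluster` are assumptions made for this example and are not part of GWS.

```python
from collections import Counter
from typing import Dict, List


def check_cluster(nodes: List[Dict[str, str]]) -> List[str]:
    """Return a list of restriction violations for a hypothetical node inventory.

    Each node is a dict with the assumed keys "node_id" and "role".
    """
    problems = []

    # The GWS node identity must be unique across the entire Cluster.
    id_counts = Counter(node["node_id"] for node in nodes)
    for node_id, count in sorted(id_counts.items()):
        if count > 1:
            problems.append(f"node identity '{node_id}' is used {count} times")

    # Only 1 SyncNode is deployed within a GWS Cluster.
    sync_count = sum(1 for node in nodes if node["role"] == "SyncNode")
    if sync_count != 1:
        problems.append(f"expected exactly 1 SyncNode, found {sync_count}")

    return problems


# Hypothetical inventory: names and roles are placeholders.
inventory = [
    {"node_id": "uswest-api-1", "role": "APINode"},
    {"node_id": "uswest-sync-1", "role": "SyncNode"},
    {"node_id": "useast-api-1", "role": "APINode"},
    {"node_id": "eu-api-1", "role": "APINode"},
]
print(check_cluster(inventory))
```

An empty list means neither restriction is violated. The remaining restrictions (dedicated Genesys servers, Keyspace definition, Elasticsearch clusters) depend on the environment and are not covered by this sketch.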

Configuration

This section describes the steps you must perform to configure your multiple datacenter deployment.

Cassandra

Configure Cassandra in the same way as for a single datacenter deployment (described earlier in this document), making sure that the following conditions are met:

- All Cassandra nodes must have the same cluster name in application.yaml.

- The same datacenter name must be assigned to all Cassandra nodes across the GWS datacenter (specified in cassandra-network.properties or cassandra-rackdc.properties, depending on the Cassandra deployment).

- The Keyspace definition should be created based on ks-schema-prod_HA.cql from the Installation Package, changing only the following:

  - The name and replication factor of each datacenter.

  - The number of datacenters between which replication is enabled.

For example:

CREATE KEYSPACE sipfs WITH replication = {'class': 'NetworkTopologyStrategy', 'USWest': '3', 'USEast': '3', 'EU': '3'} AND durable_writes = true;
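Because the Keyspace definition must list every GWS datacenter, it can help to generate the statement from a single mapping of datacenter names to replication factors. The following Python sketch shows one way to do that. The function name `build_keyspace_cql` is hypothetical, and the datacenter names and factors are taken from the example above.

```python
from typing import Dict


def build_keyspace_cql(keyspace: str, replication: Dict[str, int]) -> str:
    """Build a CREATE KEYSPACE statement using NetworkTopologyStrategy.

    `replication` maps each Cassandra datacenter name to its replication factor.
    """
    if not replication:
        raise ValueError("at least one datacenter is required")

    # The strategy class comes first, followed by one entry per datacenter.
    options = ["'class': 'NetworkTopologyStrategy'"]
    for datacenter, factor in replication.items():
        options.append(f"'{datacenter}': '{factor}'")
    body = ", ".join(options)

    return (
        f"CREATE KEYSPACE {keyspace} WITH replication = {{{body}}} "
        "AND durable_writes = true;"
    )


statement = build_keyspace_cql("sipfs", {"USWest": 3, "USEast": 3, "EU": 3})
print(statement)
```

With these inputs, the sketch reproduces the example statement above. Adding or removing a GWS datacenter then means changing one entry in the mapping.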